It seems like it’s been cold for almost the entire winter this year, with the exception of the few days last week when we got more than 16 inches of snow. Unfortunately, it’s been hard to enjoy it, with daily high temperatures typically well below -20°F.

Let’s see how this winter ranks among the early-season Fairbanks winters going back to 1904. To get an estimate of how cold the winter is, I’m adding together all the daily average temperatures (in degrees Celsius) for each day from October 1st through yesterday. Lower values for this sum indicate colder winters.

Here’s the SQL query. The CASE WHEN stuff is to include the recent data that isn’t in the database I was querying.

SELECT year,

CASE WHEN year = 2012 THEN cum_deg - 112 ELSE cum_deg END AS cum_deg,

rank() OVER (

ORDER BY CASE WHEN year = 2012 THEN cum_deg - 112 ELSE cum_deg END

) AS rank

FROM (

SELECT year, round(sum(tavg_c) AS cum_deg

FROM (

SELECT extract(year from dte) AS year,

extract(doy from dte) AS doy,

tavg_c

FROM ghcnd_obs

WHERE station_id = 'USW00026411'

ORDER BY year, doy

) AS foo

WHERE doy between extract(doy from '2011-10-01'::date)

and extract(doy from '2012-12-19'::date)

GROUP BY year ORDER BY year

) AS bar

ORDER by rank;

And the results: this has been the fifth coldest early-season winter since 1904.

| O/N/D rank | year | O/N/D cumulative °C | N/D rank |

|---|---|---|---|

| 1 | 1917 | -1550 | 1 |

| 2 | 1956 | -1545 | 4 |

| 3 | 1977 | -1451 | 3 |

| 4 | 1975 | -1444 | 5 |

| 5 | 2012 | -1388 | 7 |

| 6 | 1946 | -1380 | 2 |

| 7 | 1999 | -1337 | 12 |

| 8 | 1966 | -1305 | 9 |

| 9 | 1942 | -1303 | 6 |

| 10 | 1935 | -1298 | 10 |

In addition to the ranks for October through today (O/N/D rank in the table), the last column (N/D rank) shows the same calculation without October temperatures. It’s always a good idea to examine how well a relationship holds up when the interval is manipulated in order to see if the results are an artifact of the choice of period. In this case, the rankings change, but not dramatically.

Tonight we may cross -50°F for the first time this year at our house, but even without the very cold temperatures predicted (but not record cold) through the weekend, the start of the 2012/2013 winter has been exceptionally chilly.

It’s now December 1st and the last time we got new snow was on November 11th. In my last post I looked at the lengths of snow-free periods in the available weather data for Fairbanks, now at 20 days. That’s a long time, but what I’m interested in looking at today is whether the monthly pattern of snowfall in Fairbanks is changing.

The Alaska Dog Musher’s Association holds a series of weekly sprint races starting at the beginning of December. For the past several years—and this year—there hasn’t been enough snow to hold the earliest of the races because it takes a certain depth of snowpack to allow a snow hook to hold a team back should the driver need to stop. I’m curious to know if scheduling a bunch of races in December and early January is wishful thinking, or if we used to get a lot of snow earlier in the season than we do now. In other words, has the pattern of snowfall in Fairbanks changed?

One way to get at this is to look at the earliest data in the “winter year” (which I’m defining as starting on September 1st, since we do sometimes get significant snowfall in September) when 12 inches of snow has fallen. Here’s what that relationship looks like:

And the results from a linear regression:

Call:

lm(formula = winter_doy ~ winter_year, data = first_foot)

Residuals:

Min 1Q Median 3Q Max

-60.676 -25.149 -0.596 20.984 77.152

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -498.5005 462.7571 -1.077 0.286

winter_year 0.3067 0.2336 1.313 0.194

Residual standard error: 33.81 on 60 degrees of freedom

Multiple R-squared: 0.02793, Adjusted R-squared: 0.01173

F-statistic: 1.724 on 1 and 60 DF, p-value: 0.1942

According to these results the date of the first foot of snow is getting later in the year, but it’s not significant, so we can’t say with any authority that the pattern we see isn’t just random. Worse, this analysis could be confounded by what appears to be a decline in the total yearly snowfall in Fairbanks:

This relationship (less snow every year) has even less statistical significance. If we combine the two analyses, however, there is a significant relationship:

Call:

lm(formula = winter_year ~ winter_doy * snow, data = yearly_data)

Residuals:

Min 1Q Median 3Q Max

-35.15 -11.78 0.49 14.15 32.13

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1.947e+03 2.082e+01 93.520 <2e-16 ***

winter_doy 4.297e-01 1.869e-01 2.299 0.0251 *

snow 5.248e-01 2.877e-01 1.824 0.0733 .

winter_doy:snow -7.022e-03 3.184e-03 -2.206 0.0314 *

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 17.95 on 58 degrees of freedom

Multiple R-squared: 0.1078, Adjusted R-squared: 0.06163

F-statistic: 2.336 on 3 and 58 DF, p-value: 0.08317

Here we’re “predicting” winter year based on the yearly snowfall, the first date where a foot of snow had fallen, and the interaction between the two. Despite the near-significance of the model and the parameters, it doesn’t do a very good job of explaining the data (almost 90% of the variation is unexplained by this model).

One problem with boiling the data down into a single (or two) values for each year is that we’re reducing the amount of data being analyzed, lowering our power to detect a significant relationship between the pattern of snowfall and year. Here’s what the overall pattern for all years looks like:

And the individual plots for each year in the record:

Because “winter month” isn’t a continuous variable, we can’t use normal linear regression to evaluate the relationship between year and monthly snowfall. Instead we’ll use multinominal logistic regression to investigate the relationship between which month is the snowiest, and year:

library(nnet)

model <- multinom(data = snowiest_month, winter_month ~ winter_year)

summary(model)

Call:

multinom(formula = winter_month ~ winter_year, data = snowiest_month)

Coefficients:

(Intercept) winter_year

3 30.66572 -0.015149192

4 62.88013 -0.031771508

5 38.97096 -0.019623059

6 13.66039 -0.006941225

7 -68.88398 0.034023510

8 -79.64274 0.039217108

Std. Errors:

(Intercept) winter_year

3 9.992962e-08 0.0001979617

4 1.158940e-07 0.0002289479

5 1.120780e-07 0.0002218092

6 1.170249e-07 0.0002320081

7 1.668613e-07 0.0003326432

8 1.955969e-07 0.0003901701

Residual Deviance: 221.5413

AIC: 245.5413

I’m not exactly sure how to interpret the results, but typically you’re looking to see if the intercepts and coefficients are significantly different from zero. If you look at the difference in magnitude between the coefficients and the standard errors, it appears they are significantly different from zero, which would imply they are statistically significant.

In order to examine what they have to say, we’ll calculate the probability curves for whether each month will wind up as the snowiest month, and plot the results by year.

fit_snowiest <- data.frame(winter_year = 1949:2012)

probs <- cbind(fit_snowiest, predict(model, newdata = fit_snowiest, "probs"))

probs.melted <- melt(probs, id.vars = 'winter_year')

names(probs.melted) <- c('winter_year', 'winter_month', 'probability')

probs.melted$month <- factor(probs.melted$winter_month)

levels(probs.melted$month) <- \

list('oct' = 2, 'nov' = 3, 'dec' = 4, 'jan' = 5, 'feb' = 6, 'mar' = 7, 'apr' = 8)

q <- ggplot(data = probs.melted, aes(x = winter_year, y = probability, colour = month))

q + theme_bw() + geom_line(size = 1) + scale_y_continuous(name = "Model probability") \

+ scale_x_continuous(name = 'Winter year', breaks = seq(1945, 2015, 5)) \

+ ggtitle('Snowiest month probabilities by year from logistic regression model,\n

Fairbanks Airport station') \

+ scale_colour_manual(values = \

c("violet", "blue", "cyan", "green", "#FFCC00", "orange", "red"))

The result:

Here’s how you interpret this graph. Each line shows how likely it is that a month will be the snowiest month (November is always the snowiest month because it always has the highest probabilities). The order of the lines for any year indicates the monthly order of snowiness (in 1950, November, December and January were predicted to be the snowiest months, in that order), and months with a negative slope are getting less snowy overall (November, December, January).

November is the snowiest month for all years, but it’s declining, as is snow in December and January. October, February, March and April are increasing. From these results, it appears that we’re getting more snow at the very beginning (October) and at the end of the winter, and less in the middle of the winter.

Footprints frozen in the Creek

Reading today’s weather discussion from the Weather Service, they state:

LITTLE OR NO SNOWFALL IS EXPECTED IN THE FORECAST AREA FOR THE NEXT WEEK.

The last time it snowed was November 11th, so if that does happen, it will be at least 15 days without snow. That seems unusual for Fairbanks, so I checked it out.

Finding the lengths of consecutive events is something I’ve wondered how to do in SQL for some time, but it’s not all that difficult if you can get a listing of just the dates where the event (snowfall) happens. Once you’ve got that listing, you can use window functions to calculate the intervals between dates (rows), eliminate those that don’t matter, and rank them.

For this exercise, I’m looking for days with more than 0.1 inches of snow where the maximum temperature was below 10°C. And I exclude any interval where the end date is after March. Without this exclusion I’d get a bunch of really long intervals between the last snowfall of the year, and the first from the next year.

Here’s the SQL (somewhat simplified), using the GHCN-Daily database for the Fairbanks airport station:

SELECT * FROM (

SELECT dte AS start,

LEAD(dte) OVER (ORDER BY dte) AS end,

LEAD(dte) OVER (ORDER BY dte) - dte AS interv

FROM (

SELECT dte

FROM ghcnd_obs

WHERE station_id = 'USW00026411'

AND tmax < 10.0

AND snow > 0

) AS foo

) AS bar

WHERE extract(month from foo.end) < 4

AND interv > 6

ORDER BY interv DESC;

Here’s the top-8 longest periods:

| Start | End | Days |

|---|---|---|

| 1952‑12‑01 | 1953‑01‑19 | 49 |

| 1978‑02‑08 | 1978‑03‑16 | 36 |

| 1968‑02‑23 | 1968‑03‑28 | 34 |

| 1969‑11‑30 | 1970‑01‑02 | 33 |

| 1959‑01‑02 | 1959‑02‑02 | 31 |

| 1979‑02‑01 | 1979‑03‑03 | 30 |

| 2011‑02‑26 | 2011‑03‑27 | 29 |

| 1950‑02‑02 | 1950‑03‑03 | 29 |

Kinda scary that there have been periods where no snow fell for more than a month!

Here’s how many times various snow-free periods longer than a week have come since 1948:

| Days | Count |

|---|---|

| 7 | 39 |

| 10 | 32 |

| 9 | 30 |

| 8 | 23 |

| 12 | 17 |

| 11 | 17 |

| 13 | 12 |

| 18 | 10 |

| 15 | 8 |

| 14 | 8 |

We can add one more to the 8-day category as of midnight tonight.

Early-season ski from work

Yesterday a co-worker and I were talking about how we weren’t able to enjoy the new snow because the weather had turned cold as soon as the snow stopped falling. Along the way, she mentioned that it seemed to her that the really cold winter weather was coming later and later each year. She mentioned years past when it was bitter cold by Halloween.

The first question to ask before trying to determine if there has been a change in the date of the first cold snap is what qualifies as “cold.” My officemate said that she and her friends had a contest to guess the first date when the temperature didn’t rise above -20°F. So I started there, looking for the month and day of the winter when the maximum daily temperature was below -20°F.

I’m using the GHCN-Daily dataset from NCDC, which includes daily minimum and maximum temperatures, along with other variables collected at each station in the database.

When I brought in the data for the Fairbanks Airport, which has data available from 1948 to the present, there was absolutely no relationship between the first -20°F or colder daily maximum and year.

However, when I changed the definition of “cold” to the first date when the daily minimum temperature is below -40, I got a weak (but not statistically significant) positive trend between date and year.

The SQL query looks like this:

SELECT year, water_year, water_doy, mmdd, temp

FROM (

SELECT year, water_year, water_doy, mmdd, temp,

row_number() OVER (PARTITION BY water_year ORDER BY water_doy) AS rank

FROM (

SELECT extract(year from dte) AS year,

extract(year from dte + interval '92 days') AS water_year,

extract(doy from dte + interval '92 days') AS water_doy,

to_char(dte, 'mm-dd') AS mmdd,

sum(CASE WHEN variable = 'TMIN'

THEN raw_value * raw_multiplier

ELSE NULL END

) AS temp

FROM ghcnd_obs

INNER JOIN ghcnd_variables USING(variable)

WHERE station_id = 'USW00026411'

GROUP BY extract(year from dte),

extract(year from dte + interval '92 days'),

extract(doy from dte + interval '92 days'),

to_char(dte, 'mm-dd')

ORDER BY water_year, water_doy

) AS foo

WHERE temp < -40 AND temp > -80

) AS bar

WHERE rank = 1

ORDER BY water_year;

I used “water year” instead of the actual year because the winter is split between two years. The water year starts on October 1st (we’re in the 2013 water year right now, for example), which converts a split winter (winter of 2012/2013) into a single year (2013, in this case). To get the water year, you add 92 days (the sum of the days in October, November and December) to the date and use that as the year.

Here’s what it looks like (click on the image to view a PDF version):

The dots are the observed date of first -40° daily minimum temperature for each water year, and the blue line shows a linear regression model fitted to the data (with 95% confidence intervals in grey). Despite the scatter, you can see a slightly positive slope, which would indicate that colder temperatures in Fairbanks are coming later now, than they were in the past.

As mentioned, however, our eyes often deceive us, so we need to look at the regression model to see if the visible relationship is significant. Here’s the R lm results:

Call:

lm(formula = water_doy ~ water_year, data = first_cold)

Residuals:

Min 1Q Median 3Q Max

-45.264 -15.147 -1.409 13.387 70.282

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -365.3713 330.4598 -1.106 0.274

water_year 0.2270 0.1669 1.360 0.180

Residual standard error: 23.7 on 54 degrees of freedom

Multiple R-squared: 0.0331, Adjusted R-squared: 0.01519

F-statistic: 1.848 on 1 and 54 DF, p-value: 0.1796

The first thing to check in the model summary is the p-value for the entire model on the last line of the results. It’s only 0.1796, which means that there’s an 18% chance of getting these results simply by chance. Typically, we’d like this to be below 5% before we’d consider the model to be valid.

You’ll also notice that the coefficient of the independent variable (water_year) is positive (0.2270), which means the model predicts that the earliest cold snap is 0.2 days later every year, but that this value is not significantly different from zero (a p-value of 0.180).

Still, this seems like a relationship worth watching and investigating further. It might be interesting to look at other definitions of “cold,” such as requiring three (or more) consecutive days of -40° temperatures before including that period as the earliest cold snap. I have a sense that this might reduce the year to year variation in the date seen with the definition used here.

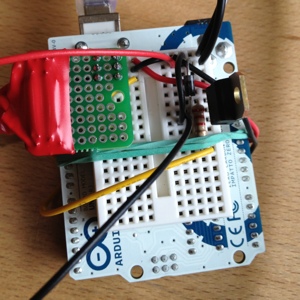

After writing my last blog post I decided I really should update the code so I can control the temperature thresholds without having to rebuild and upload the code to the Arduino. That isn’t a big issue when I have easy access to the board and a suitable computer nearby, but doing this over the network is dangerous because I could brick the board and wouldn’t be able to disconnect the power or press the reset button. Also, the default position of the transistor is off, which means that bad code loaded onto the Arduino shuts off the fan.

But I will have a serial port connection through the USB port on the server (which is how I will monitor the status of the setup), and I can send commands at the same time I’m receiving data. Updating the code to handle simple one-character messages is pretty easy.

First, I needed to add a pair of variables to store the thresholds, and initialize them to their defaults. I also replaced the hard-coded values in the temperature difference comparison section of the program with these variables.

int temp_diff_full = 6;

int temp_diff_half = 2;

Then, inside the main loop, I added this:

// Check for inputs

while (Serial.available()) {

int ser = Serial.read();

switch (ser) {

case 70: // F

temp_diff_full = temp_diff_full + 1;

Serial.print("full speed = ");

Serial.println(temp_diff_full);

break;

case 102: // f

temp_diff_full = temp_diff_full - 1;

Serial.print("full speed = ");

Serial.println(temp_diff_full);

break;

case 72: // H

temp_diff_half = temp_diff_half + 1;

Serial.print("half speed = ");

Serial.println(temp_diff_half);

break;

case 104: // h

temp_diff_half = temp_diff_half - 1;

Serial.print("half speed = ");

Serial.println(temp_diff_half);

break;

case 100: // d

temp_diff_full = 6;

temp_diff_half = 2;

Serial.print("full speed = ");

Serial.println(temp_diff_full);

Serial.print("half speed = ");

Serial.println(temp_diff_half);

break;

}

}

This checks to see if there’s any data in the serial buffer, and if there is, it reads it one character at a time. Raising and lowering the full speed threshold by one degree can be done by sending F to raise and f to lower; the half speed thresholds are changed with H and h, and sending d will return the values back to 6 and 2.